I read a lot of literature, studies and books about escorting and the sex industry. I was curious whether you guys (and ladies) feel that one reason sex work is still marginalized and seen as immoral or negative is the degrading way the media often portrays escorts and strippers. Or do you feel it's still our deeply Victorian values about sexuality? I always watch Law and Order and shows of that nature, and there hasn't been one damn show that ever showed a successful, happy escort. It's always about how they get killed, or they're drug-addled and not very bright, or the sex industry is shown as sleazy. The other night I was watching reruns of Criminal Minds, and they depicted a high-class escort who actually came from a wealthy family but was 'angry' at her father for having affairs with escorts, so she went around killing clients. I thought, you know, jeez.

Or is it that many people simply think sex work is so 'horrifying' that no one wants to think or believe some of us are actually well-adjusted, intelligent, successful and ACTUALLY LIKE what we do?

It doesn't matter to me what society thinks, or why they think it, but I was just curious as to what your opinions are.

I also believe it obviously isn't men who think poorly of sex workers. Here in Nevada, and in the West, prostitutes were treated with respect by the lonely bachelors, cowboys and miners. It was only when families started moving into these areas that ladies of the night were forced to ply their trade in remote areas. In the 1950s and 1960s, it was legal here for a lady to work out of her home as a prostitute. Then, once families started moving in, these women were told to go work in brothels in remote counties. As if that was going to stop men from driving around to pay for play.

- alluringava

- 02-09-2012, 02:38 PM

- DallasRain

- 02-09-2012, 02:55 PM

good question!

I grew up in a strict Bible-thumping Baptist home...so "hookers or johns" were the "scum of the earth"...it was looked down upon as a low-life kind of living...involving drugs, pimps and seedy hotels!

Little do they know...I stay in 3- or 4-star hotels...rent a nice comfy apartment in a great part of New Orleans...have no outstanding bills...make enough money to feed my shopping habit (lol)...have freedom to travel...and have great friends in the hobby!

- Iaintliein

- 02-09-2012, 03:03 PM

Absolutely the media plays a role in shaping societal views. All indications are that most of the western world was very similar in societal outlook when there was only one book widely available.

Today's non-stop parade of series glorifying the police state is no accident. I particularly dislike the one you refer to, having watched one episode. Occasionally there are books, articles, or documentaries giving a more rational treatment of the subject, but their effect is largely swamped by the sort of thing you describe. I just can't stand this show about the all-knowing feds jetting around the country to solve every problem; the one I watched dealt with an evil, dangerous religious cult that called itself "The Libertarians". I'm sure using the same name as a political party I largely support was a complete accident!

Interestingly, every time my SO and I have discussed the subject (in macro terms only), she's been a more outspoken proponent than I am that this is a profession absolutely necessary to the well-being of society and should be not only legal but respected. I'm quite sure reducing the argument to micro terms would make for a very different view.

Like all forms of prohibition, it will last as long as it gives preachers something to preach about, politicians something to make speeches about, and LE something to justify bigger budgets. And there's little doubt many if not most wives would love to see the "competition" stamped out, even though it isn't "competition" at all.

- alluringava

- 02-09-2012, 03:10 PM

good question!

I grew up in a strict Bible-thumping Baptist home...so "hookers or johns" were the "scum of the earth"...it was looked down upon as a low-life kind of living...involving drugs, pimps and seedy hotels!

Little do they know...I stay in 3- or 4-star hotels...rent a nice comfy apartment in a great part of New Orleans...have no outstanding bills...make enough money to feed my shopping habit (lol)...have freedom to travel...and have great friends in the hobby! Originally Posted by DallasRain

I love breaking the mold.

I worked really hard for five years and saved up a lot of money to buy and decorate my dream home. Yes, my parents know. They weren't too happy about it, but they support me and are always concerned for my safety. I have an obsession with reading books about our profession, and nearly always these autobiographies (The Price by Natalia McLennan, one of the highest-paid escorts in NYC) are sad themselves: the girls spiral out of control. I've had a great time and great fun in the biz, and I hope to work just to pay off the rest of my mortgage, stash money in my retirement accounts, and then...enjoy life as a freelance writer.

- daratu1

- 02-09-2012, 03:45 PM

I would agree that the media influences the view of the hobby. That and the still largely Victorian views of sex. With the media only portraying ladies as drug-addled, pimped, abused, or just "sad", people expect that to be the truth. Throw in the Bible-thumping view, and not only is it men preying on the weak, it's a cardinal sin being committed.

So yes, the media influences our views, and then the religious influence also further influences our views.

- Geauxtiger1970

- 02-09-2012, 03:49 PM

Unfortunately, the media dictates so much of today's views! In reality it only pushes what works for them and whoever they can drain the most. I'm not going to get on a political soapbox, but take a look at the way the media handles candidates who are set to run against King Obama! First we have Pizza Man: he was under the radar for the early part of the race and then gained much success after a couple of the debates. What did the media do? They dug up as much as they could on the man and drove him out of the race. Have you heard one thing about Cain since he pulled out?

What got him eliminated? A relationship that he had outside of his marriage?

What did JFK and Clinton do in the White House?

I'm done and I hate the media?

- Shayla

- 02-09-2012, 04:07 PM

Almost any kind of sexual activity is viewed as somewhat "taboo", not just escorting. What's contradictory is how the media oversexualizes everything. We live in a very hypocritical society.

- Sweet N Little

- 02-09-2012, 04:18 PM

- Guest030824

- 02-09-2012, 04:59 PM

The shows you see on TV all have a message that the producer wants to push. I watch Swamp People, and they want me to think that those Coon Asses are all dumber than hell. I watched a show the other night about some survivalists that wanted me to think they were all a little nuts. The shows about providers and clients are all the same. Law and Order is against guns and the death penalty. I have met some wonderful people who are after the same thing I am after. On the other hand, I have met a few cheating liars who were out to scam me even as I spent money to help them (Missmelissa). I write that off as an education in not trusting everyone.

- tonytiger4u

- 02-09-2012, 05:31 PM

I think the main driver of the negative view of escorts is other women: the kind of women who want a lifelong, devoted husband. Escorts provide serious competition for them. Men just go along with the idea because it is easy. Men aren't interested in fighting over the idea, since they can't do it without making their wives think they are seeing escorts. In the end, men will always take the easy road. It's just not worth the trouble.

For me personally, I'd have to say my view of escorts was pretty low until I met a few. I was just ignorant, I guess. Shayla is exactly right about the hypocrisy we see in society. As SNL put it, sex sells, but we're just not allowed to be buyers? The last five books I have read all mention the sex industry. They were biographies, and one was 1776, a detailed account of the first year of the Revolutionary War, which had a section about all the pleasure to be had in New York! It's everywhere and always has been.

- Sweet N Little

- 02-09-2012, 05:57 PM

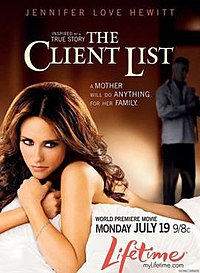

Exactly, Tiger. This past Sunday this book was in the religious section of our state paper... even religion is "working it".

- charlestudor2005

- 02-09-2012, 06:23 PM

I don't think you can pin the whole thing on the media. Sure, they're a part of it. But money has always been a motive for the media, and money comes rolling in on certain stories. For instance, there's an old phrase, "if it bleeds, it leads," meaning stories with gore were the first ones reported. Likewise, scandals rake in the money for the media. There's nothing sexier than a scandal with sex at the center. Graft makes a good scandal, but sex is better. So the media is driven by money, and because sex is a money-maker, it winds up in the media.

But I think you have to study something more exhaustive than just the media. Just where did these standards come from? The conduct of sex workers has been against the law since the formation of this country. Even certain sex acts (for which no money changed hands) that were viewed as "abnormal" became criminalized. I think we can safely say the mores that came with the Puritans and other refugees/immigrants from Europe created the basis for our current laws (civil and criminal) regarding sex. We have come a long way since 1620, but we have a long way to go.

As things stand now, statutes outlawing such things as adultery or sodomy are pretty much gone. Same-sex marriage is seeing advances one state at a time.

It is interesting to me that we have this national discussion over same-sex marriage but have not yet broached the issue of sex for sale. Personally, I don't know what I think about "legalizing" prostitution. As with any issue, there are pluses and minuses. If it were legalized, I'm sure it would be regulated, and I'm not sure how much I'd like that. But maybe just "decriminalizing" it without regulation would be better, IDK.

But I think the media is just one side of this prism.

- Yowzer

- 02-09-2012, 06:48 PM

I think, unfortunately, society "needs" someone to scorn, look down on, marginalize and vilify in order to make people feel that they are somehow "better" than others, like tuscon was mentioning with the Swamp People TV series and its depiction of Southerners (I'll add Rocket City Rednecks, hand fishing, etc. to that list). I hate to bring politics into it, but it's the same way certain candidates (and news people, see faux news) talk about the other party like they are out to destroy this country on purpose.

As it relates to the hobby and the business surrounding it, I think it's because no one will defend it, fight back, or counter-argue anyone who gets down on it. And the favorite type of target is one that doesn't or can't fight back.

There are some good movies with better depictions of working women. As I mentioned in the other thread, Klute (http://www.imdb.com/title/tt0067309/) was an early one. Butterfield 8 with Elizabeth Taylor, not so much. The movie I liked during the '80s was Burt Reynolds's Sharky's Machine (http://www.imdb.com/title/tt0083064/), with the very hot-looking (at that time) Rachel Ward as the "kept" woman running from the mob. Other movies usually have the call girl turn good at the end. Does Henry Winkler/Michael Keaton in Night Shift (http://www.imdb.com/title/tt0084412/) count? (Guys on the night shift at the morgue start a call-girl operation.) At least it was sympathetic to the girls.

I'm sure there were more movies where the main character either falls in love with or meets up with a "lady of the evening" at some point in the movie. Didn't Tom Cruise's Risky Business (http://www.imdb.com/title/tt0086200/) have that plot line? Boy meets hooker, fights bad guys, falls in love. I never understood it since I thought Tom was gay (joke).

Today the big "scare" words are trafficking and underage. Kinda hard to "defend" the business when they can throw that shit out.

- Sweet N Little

- 02-09-2012, 07:00 PM

Well said, Yowzer, and so true. I also think people are getting desensitized to it. A movie called The Client List (yes, those kinds of clients, lol) is now being made into a TV series with the same hottie who starred in the movie, so it must have done pretty well.

- pyramider

- 02-09-2012, 08:15 PM

I love the work of the sweatshops.